Storing and processing CAE documents in XML format is a dominant archival solution because it fully complies with the requirements of the networked economy.

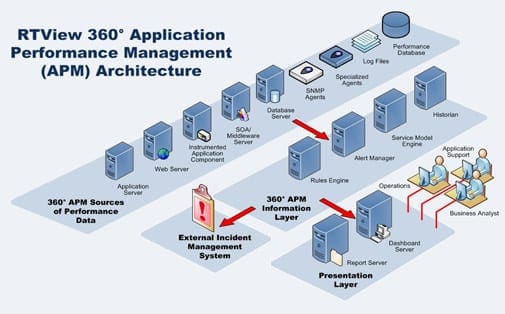

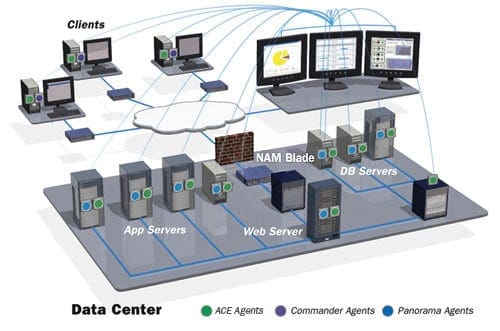

The chief challenge in the networked economy today is integrating all available documents into one unified computational environment that works in the Web information space. The Web-driven CAE system is an n-tier architecture in which distributed computers with different run-time environments and engineering software communicate by exchanging XML documents. A significant policy in enterprise integration is therefore to present documents in XML form.

Converting conventional documents into XML documents. Software agents need to access engineering documents that were built at different nodes, for use in unanticipated contexts.

The first step for the designers is to manually mark up the various conventional documents, database tables, messages from mobile devices and sensors, etc. Using this markup, the information Web services and activated agents can interpret and reason with the information, as shown in Figure 4. Establishing semantics over the marked-up information pathways plays a key role in numerous engineering tasks, such as comparing models to understand their semantic differences, mapping terms and entities to ensure product data integration, or simply reusing entities and product data queries to prevent duplication of work.
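As a rough illustration of this mark-up step, the sketch below wraps rows of a conventional table in XML elements and then lets a consuming agent query the result. It is a minimal sketch using Python's standard `xml.etree.ElementTree` module; the element names (`document`, `record`), the function names, and the sample `parts` table are hypothetical and are not taken from any CAE standard.

```python
import xml.etree.ElementTree as ET


def rows_to_xml(table_name, rows):
    """Mark up table rows (dicts) as XML; element names are illustrative only."""
    root = ET.Element("document", {"source": table_name})
    for row in rows:
        record = ET.SubElement(root, "record")
        for column, value in row.items():
            # Each column becomes a child element carrying the cell value as text.
            ET.SubElement(record, column).text = str(value)
    return ET.tostring(root, encoding="unicode")


def find_records(xml_text, column, value):
    """What a consuming agent might do: select records by a marked-up field."""
    root = ET.fromstring(xml_text)
    return [
        {child.tag: child.text for child in record}
        for record in root.iter("record")
        if record.findtext(column) == str(value)
    ]


# Hypothetical sample data standing in for a conventional database table.
parts = [
    {"part_id": "P-100", "material": "steel", "mass_kg": 2.5},
    {"part_id": "P-101", "material": "aluminium", "mass_kg": 0.9},
]
xml_text = rows_to_xml("parts", parts)
steel_parts = find_records(xml_text, "material", "steel")
```

Once the data carries explicit tags, the agent can select records by field name rather than by position in a file. All values come back as text, so any typing has to be supplied by a schema or by the consumer.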

XML-based languages in CAE. Engineering societies in all areas create XML variants of design and manufacturing languages. The dominant ones in the mechanical and electronics industries are:

- STEP (Standard for Exchange of Product model data) provides a mechanism capable of describing product data throughout the life cycle of a product, independent of any particular system. The nature of this description makes it suitable not only for neutral file exchange, but also as a basis for implementing and sharing product databases and for archiving.

- The Process Specification Language (PSL) defines a neutral representation for manufacturing processes that supports automated reasoning. Process data is used throughout the life cycle of a product, from early indications of manufacturing processes flagged during design, through process planning, validation, production scheduling, and control.

- The Systems Modeling Language (SysML) is a general-purpose graphical modeling language for specifying, analyzing, designing, and verifying complex systems that may include hardware, software, information, personnel, procedures, and facilities. In particular, the language provides graphical representations with a semantic foundation for modeling system requirements, behavior, structure, and parametrics, which are used to integrate with other engineering analysis models.

- Electronic Business XML (ebXML) provides an open, XML-based infrastructure that enables the global use of electronic business information in an interoperable, secure, and consistent manner by all trading partners. The ebXML architecture is a unique set of concepts, partly theoretical and partly implemented in the existing ebXML standards work.

- Mathematical Markup Language (MathML) has been designed to encode mathematical material suitable for teaching and scientific communication at all levels, providing both mathematical notation and mathematical meaning. It facilitates conversion to and from other math formats, both presentational and semantic, and allows the passing of information intended for specific renderers and applications. It also supports efficient browsing of lengthy expressions and is suited to template-based and other math editing techniques. MathML is human-legible and simple for software to generate and process.

- Materials Markup Language (MatML) addresses the e-business needs of materials producers, designers, fabricators, quality assurance, and failure analysis specialists by defining an XML-based standard markup language for the exchange of all types of materials technical information, while retaining the high quality and integrity of that information despite its complexity.
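The MathML entry above notes that the language is simple for software to generate and process. As a hedged illustration, the sketch below builds presentation MathML for an equation of the form "symbol = numerator / denominator" and parses it back. The elements `math`, `mrow`, `mi`, `mo`, and `mfrac` are standard presentation MathML; the helper name `fraction_equation` and the example formula (stress as force over area) are assumptions chosen only for illustration.

```python
import xml.etree.ElementTree as ET

# The W3C MathML namespace URI.
MATHML_NS = "http://www.w3.org/1998/Math/MathML"


def fraction_equation(lhs, numerator, denominator):
    """Return presentation MathML for `lhs = numerator / denominator`."""
    math = ET.Element("math", {"xmlns": MATHML_NS})
    row = ET.SubElement(math, "mrow")
    ET.SubElement(row, "mi").text = lhs   # identifier on the left-hand side
    ET.SubElement(row, "mo").text = "="   # operator
    frac = ET.SubElement(row, "mfrac")    # first child: numerator, second: denominator
    ET.SubElement(frac, "mi").text = numerator
    ET.SubElement(frac, "mi").text = denominator
    return ET.tostring(math, encoding="unicode")


# Hypothetical example: normal stress as force divided by area.
markup = fraction_equation("σ", "F", "A")

# Processing side: parse the markup back and read the fraction's operands.
parsed = ET.fromstring(markup)
frac = parsed.find(f".//{{{MATHML_NS}}}mfrac")
operands = [child.text for child in frac]
```

The same string can be embedded in a Web page for rendering or handed to another tool. After parsing, the element tags are namespace-qualified, which is why the lookup uses the `{namespace}mfrac` form.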